from linear_regression import LinearRegression

import numpy as np

from matplotlib import pyplot as plt

def pad(X):

return np.append(X, np.ones((X.shape[0], 1)), 1)

def LR_data(n_train = 100, n_val = 100, p_features = 1, noise = .1, w = None):

if w is None:

w = np.random.rand(p_features + 1) + .2

X_train = np.random.rand(n_train, p_features)

y_train = pad(X_train)@w + noise*np.random.randn(n_train)

X_val = np.random.rand(n_val, p_features)

y_val = pad(X_val)@w + noise*np.random.randn(n_val)

return X_train, y_train, X_val, y_valLink to code: https://github.com/jchung2020/jchung2020.github.io/tree/main/posts/linear_regression

Linear Regression

Linear Regression Algorithms take our data, encoded in the usual feature matrix \(\textbf{X}\), and a target vector \(\textbf{y}\) of values, and creates a prediction for these values.

The goal of this blog post is to compare the analytic and gradient methods of Linear Regression, implement LASSO regularization, and implement our algorithms to some data.

Analytic and Gradient Methods

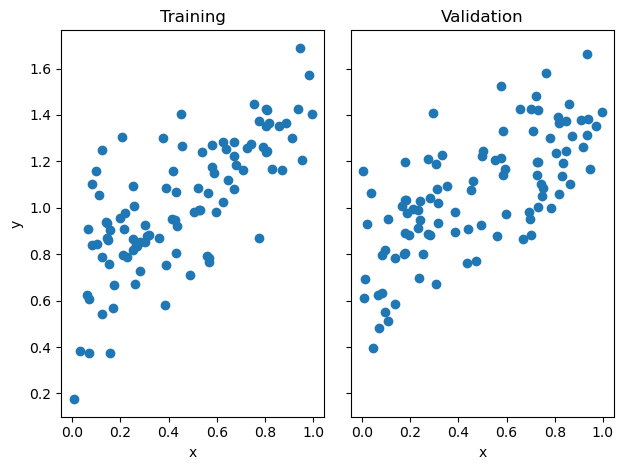

As usual, we start by getting our data. Notice here we have generated the training and validation data randomly. We generate a random weight vector, and with some noise, some data around that weight vector.

%load_ext autoreload

%autoreload 2For simplicity, we start with 2D linear data.

n_train = 100

n_val = 100

p_features = 1

noise = 0.2

# create some data

X_train, y_train, X_val, y_val = LR_data(n_train, n_val, p_features, noise)

# plot it

fig, axarr = plt.subplots(1, 2, sharex = True, sharey = True)

axarr[0].scatter(X_train, y_train)

axarr[1].scatter(X_val, y_val)

labs = axarr[0].set(title = "Training", xlabel = "x", ylabel = "y")

labs = axarr[1].set(title = "Validation", xlabel = "x")

plt.tight_layout()

The analytic method uses matrix multiplication. As long as \(\mathbf{X^{T} X}\) is invertible, it is possible to find exact solution that will minimize the linear-regression loss. This, however, is computationally costly. If there are \(p\) features and \(n\) data points, the algorithm will take time \(O(np^2)\).

LR = LinearRegression()

LR.fit_analytic(X_train, y_train) # I used the analytical formula as my default fit method

print(f"Training score = {LR.score(X_train, y_train).round(4)}")

print(f"Validation score = {LR.score(X_val, y_val).round(4)}")

print("Weight vector: ",LR.w)Training score = 0.5345

Validation score = 0.4582

Weight vector: [0.74340828 0.67994067]On the other, using gradient descent can be much faster as each step only takes time \(O(p^2)\) and potentially will not need as many steps to achieve a good result.

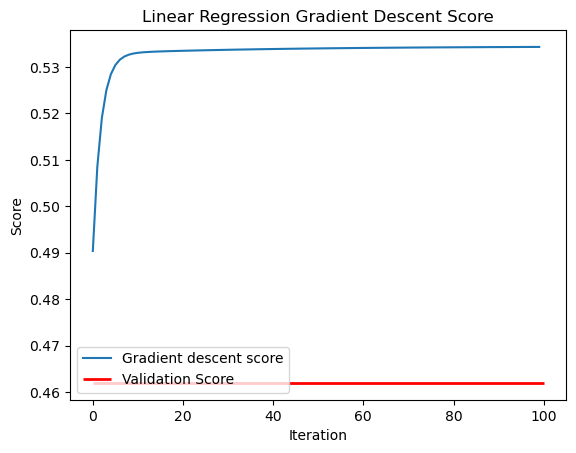

As we can see below, with 100 maximum iterations and a learning rate of 0.001, we achieve a training and validation score that is similar to the analytic algorithm.

LR2 = LinearRegression()

LR2.fit_gradient(X_train, y_train,max_iter = int(1e2), alpha = 0.001)

print(f"Training score = {LR2.score(X_train, y_train).round(4)}")

print(f"Validation score = {LR2.score(X_val, y_val).round(4)}")

print("Weight vector: ",LR2.w)Training score = 0.5343

Validation score = 0.462

Weight vector: [0.73177951 0.68551826]plt.plot(LR2.score_history,label="Gradient descent score")

plt.hlines(y=LR2.score(X_val, y_val), xmin=0, xmax=int(1e2), linewidth=2, color='r',label="Validation Score")

labels = plt.gca().set(xlabel = "Iteration", ylabel = "Score")

plt.legend()

plt.title("Linear Regression Gradient Descent Score")Text(0.5, 1.0, 'Linear Regression Gradient Descent Score')

for i in range(len(LR2.score_history)-1):

if (LR2.score_history[i+1] < LR2.score_history[i]):

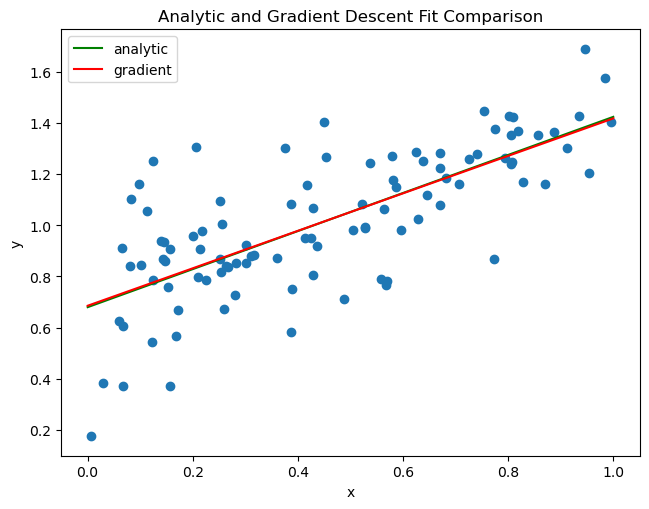

print("ISSUE!")Comparing the fits of the analytic and gradient descent methods, we can see that both are close and appear to follow the trend of the data.

def draw_line(w, x_min, x_max,title,c):

x = np.linspace(x_min, x_max, 101)

#y = -(w[0]*x + w[2])/w[1]

y = (w[0]*x + w[1])

plt.plot(x, y, color = c,label=title)

# plot it

fig = plt.scatter(X_train, y_train)

fig = draw_line(LR.w, 0, 1,"analytic","green")

fig = draw_line(LR2.w, 0, 1,"gradient","red")

#labs = plt.set(title = "Training", xlabel = "x", ylabel = "y")

#labs = axarr[1].set(title = "Validation", xlabel = "x")

plt.tight_layout()

plt.legend()

plt.title("Analytic and Gradient Descent Fit Comparison")

labels = plt.gca().set(xlabel = "x", ylabel = "y")

Breaking Linear Regression

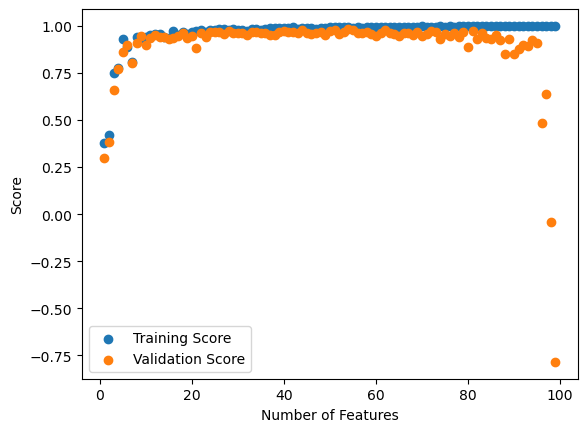

In the above case, we only used 1 feature with 100 data points. However, we can also experiment with the success of the algorithm when we increase the number of features from 1 to 99.

Note that I use the analytic algorithm so that this reflects the limitations of the linear regression algorithm itself.

n_train = 100

n_val = 100

#p_features = 1

noise = 0.2

p_features_list = [i for i in range(1,n_train)]

train_scores = []

val_scores = []

for p_features_test in p_features_list:

# create some data

X_train_test, y_train_test, X_val_test, y_val_test = LR_data(n_train, n_val, p_features_test, noise)

LR_test = LinearRegression()

LR_test.fit_analytic(X_train_test, y_train_test)

train_score = LR_test.score(X_train_test, y_train_test)

val_score = LR_test.score(X_val_test, y_val_test)

train_scores.append(train_score)

val_scores.append(val_score)plt.xlabel("Number of Features")

plt.ylabel("Score")

#plt.scatter(p_features_list[80:95],train_scores[80:95])

#plt.scatter(p_features_list[80:95],val_scores[80:95])

plt.scatter(p_features_list,train_scores)

plt.scatter(p_features_list,val_scores)

plt.legend(labels=["Training Score","Validation Score"])

#print(train_scores)<matplotlib.legend.Legend at 0x7fda025f0130>

Here I have plotted the training and validation scores. So we can see that the training score continually increases, reaching a perfect score of 1.0 at 99 features.

As expected, the training score is always larger than the validation score. We can see that after about 50 features, the validation score drops, dropping to a negative value when close to 99 features are reached. This is a consequence of overfitting. It seems that linear regression naturally overfits if the number of features is close to the number of data points. Perhaps this is not unsurprising since information wise, if we as many features as our data, we can describe our data completely. Of course, this would not predict the trend when we use our training data, hence the overfitting.

Note that a negative validation score means that our fit is worse than just using the average. We are minimizing the loss \(|| \textbf{X}\textbf{w} - \textbf{y}||^{2}_{2}\). But if there are 99 features and 100 data points, really \(\textbf{X}\) is 100 by 100 in dimension (since it is padded by a column of 1s). Hence it could be possible to invert \(\textbf{X}\), a square matrix, which would give a unique solution to \(\textbf{X}\textbf{w} = \textbf{y}\). This solution would minimize loss. This may sound good, but this means that our algorithm perfectly minimizes loss to 0 on the training data, hence the perfect 100% score on the training data and the overfitting or poor score on the validation data.

Fixing Linear Regression with LASSO Regularization

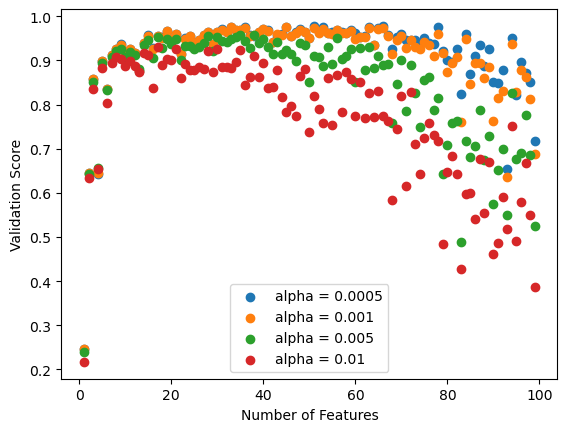

It is not great that we can break Linear Regression, since in some cases we may want to use it when we have a lot of features. We can fix this with LASSO Regularization, an addition to the loss function that will specify the weights to be small or zero in value (I assume LASSO is a play on the fact that it lassoes the weights, keeping them small). This should prevent overfitting by practically reducing the actual number of parameters that are significant to our predictions.

No surprise here, we use sklearn for our LASSO module.

from sklearn.linear_model import Lasso

n_train = 100

n_val = 100

#p_features = 1

noise = 0.2

p_features_list = [i for i in range(1,n_train)]

Lasso_train_scores = []

Lasso_val_scores = []

alpha_list = [0.0005,0.001,0.005,0.01]

train_scores = []

val_scores = []

for p_features_test in p_features_list:

# create some data

X_train_test, y_train_test, X_val_test, y_val_test = LR_data(n_train = n_train, n_val = n_val, p_features = p_features_test, noise = noise)

for alpha in alpha_list:

L_test = Lasso(alpha = alpha)

L_test.fit(X_train_test, y_train_test)

train_score = L_test.score(X_train_test, y_train_test)

val_score = L_test.score(X_val_test, y_val_test)

Lasso_train_scores.append(train_score)

Lasso_val_scores.append(val_score)

LR_test = LinearRegression()

LR_test.fit_analytic(X_train_test,y_train_test)

train_score = LR_test.score(X_train_test, y_train_test)

val_score = LR_test.score(X_val_test, y_val_test)

train_scores.append(train_score)

val_scores.append(val_score)plt.scatter(p_features_list,[Lasso_val_scores[4*i] for i in range(len(p_features_list))]) #alpha = 0.0005

plt.scatter(p_features_list,[Lasso_val_scores[4*i+1] for i in range(len(p_features_list))]) #alpha = 0.001

plt.scatter(p_features_list,[Lasso_val_scores[4*i+2] for i in range(len(p_features_list))]) #alpha = 0.005

plt.scatter(p_features_list,[Lasso_val_scores[4*i+3] for i in range(len(p_features_list))]) #alpha = 0.01

plt.legend(labels=["alpha = 0.0005","alpha = 0.001","alpha = 0.005","alpha = 0.01"])

plt.xlabel("Number of Features")

plt.ylabel("Validation Score")Text(0, 0.5, 'Validation Score')

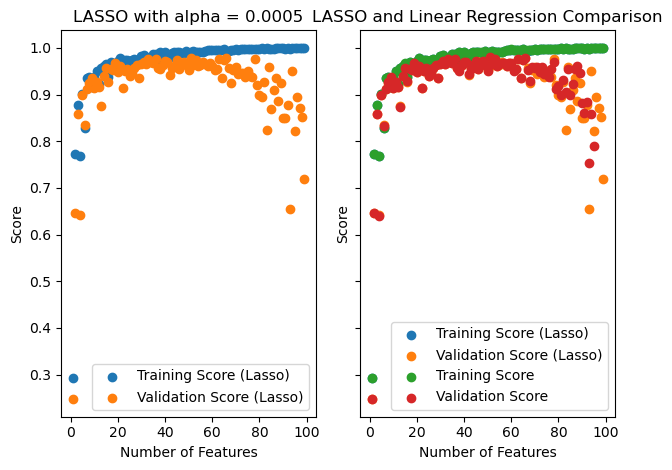

Varying the Lasso algorithm over a variety of alpha or regularization parameterizing values, we see that a value of 0.0005 produces the best validation score.

fig, axarr = plt.subplots(1, 2, sharex = True, sharey = True)

axarr[0].scatter(p_features_list,[Lasso_train_scores[4*i] for i in range(len(p_features_list))]) #alpha = 0.0005

axarr[0].scatter(p_features_list,[Lasso_val_scores[4*i] for i in range(len(p_features_list))]) #alpha = 0.0005

axarr[0].legend(labels=["Training Score (Lasso)","Validation Score (Lasso)"])

axarr[1].scatter(p_features_list,[Lasso_train_scores[4*i] for i in range(len(p_features_list))])

axarr[1].scatter(p_features_list,[Lasso_val_scores[4*i] for i in range(len(p_features_list))])

axarr[1].scatter(p_features_list,train_scores)

axarr[1].scatter(p_features_list[:95],val_scores[:95])

axarr[1].legend(labels=["Training Score (Lasso)","Validation Score (Lasso)","Training Score","Validation Score"])

labs = axarr[0].set(title = "LASSO with alpha = 0.0005", xlabel = "Number of Features", ylabel = "Score")

labs = axarr[1].set(title = "LASSO and Linear Regression Comparison", xlabel = "Number of Features", ylabel = "Score")

plt.tight_layout()

So we can see that the validation score never becomes negative, though it still drops after about 50 features.

I have excluded the validation scores that are negative for regular linear regression, and we can see that LASSO is pretty similar for a large number of features (just not very close to 99). Perhaps it is not much better at controlling for overfitting except for a large number of features.